No, it's not a typo: Nvidia's legendary Geforce 8800 GTX, which is the topic here, is so old that it still carries the GTX abbreviation, since superseded by RTX, after the product number rather than before it. Even more interesting, though, is the question of why it is considered legendary at all.

Unsurprisingly, it owes this primarily to its phenomenal performance at the time. When it was released in late 2006, it beat ATI's then-current competitor, the Radeon X1950 XTX, by an average of 64 percent in our benchmarks at a resolution of 1920×1200.

Even the Radeon HD 2900 XT, the answer from ATI (or rather AMD, which had acquired ATI in mid-2006) that appeared about half a year later, couldn't change anything about the 8800 GTX's superiority.

Combined with improved image quality, compelling efficiency, and a quiet fan design, this makes the classic 8800 GTX one of the most exceptional graphics cards the GameStar hardware editors have tested in the past 25 years. Or, in the words of our test at the time: a masterpiece.

So we're using our current anniversary to make the GameStar test of the 8800 GTX available not only in the magazine archive with a GameStar Plus subscription, but also as a freely accessible online article. If any old graphics card deserves it, it is the 8800 GTX. And if you need a little more retro feel, take a look at our timelines for AMD and Nvidia graphics cards.

Our original test from GameStar 01/2007

First things first: Nvidia’s new hardware, the Geforce 8800 GTX, runs much faster than ATI’s top model, the Radeon X1950 XTX — in some cases by 50 percent! At the same time, the first DirectX 10 graphics card sets new standards in image quality, power efficiency, and fan design – a masterpiece.

We put the MSI Geforce 8800 GTX, which is very expensive at 600 euros, and the Asus Geforce 8800 GTS (500 euros) through our benchmark suite and compare them with the Radeon X1950 XTX and the Geforce 7950 GX2.

What DirectX 10 brings

Since the introduction of DirectX 9, no change has intervened as deeply in the internal architecture of graphics processors and their theoretical capabilities as DirectX 10 does now. It will be released at the beginning of 2007 together with Windows Vista.

DirectX 10 extends the flexibility of shaders to the point where they can handle almost any computational task assigned to them. In addition to physics (more on that later), this also includes scientific calculations. Accordingly, Nvidia is promoting the use of GPUs in this field.

Part of Shader Model 4.0, and the most exciting change in DirectX 10 for gamers, is a new, third type of shader called the geometry shader. It allows, for example, efficient displacement mapping, which produces more pronounced surface relief than the previously known parallax occlusion mapping. The geometry shader also handles effects such as real-time deformation or any other conceivable modification of geometry, as well as the calculation of shadow volumes.
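To make the idea more tangible, here is a minimal sketch in plain Python of what a geometry stage does conceptually: it receives one primitive and emits new, modified ones. The names `geometry_stage`, `displace` and the height function are hypothetical and chosen for illustration only; a real geometry shader is written in HLSL and runs on the GPU, not in Python.

```python
from typing import Callable, List, Tuple

Vec3 = Tuple[float, float, float]
Triangle = Tuple[Vec3, Vec3, Vec3]


def midpoint(a: Vec3, b: Vec3) -> Vec3:
    """Return the point halfway between a and b."""
    return tuple((x + y) / 2.0 for x, y in zip(a, b))


def displace(p: Vec3, height: Callable[[float, float], float]) -> Vec3:
    """Hypothetical displacement: raise the point along +z by a height value."""
    x, y, z = p
    return (x, y, z + height(x, y))


def geometry_stage(tri: Triangle, height: Callable[[float, float], float]) -> List[Triangle]:
    """Take one input triangle, split it into four, and displace every vertex."""
    a, b, c = tri
    ab, bc, ca = midpoint(a, b), midpoint(b, c), midpoint(c, a)
    pieces = [(a, ab, ca), (ab, b, bc), (ca, bc, c), (ab, bc, ca)]
    return [tuple(displace(p, height) for p in piece) for piece in pieces]


# One flat triangle goes in, four triangles with surface relief come out.
flat = ((0.0, 0.0, 0.0), (2.0, 0.0, 0.0), (0.0, 2.0, 0.0))
bumpy = geometry_stage(flat, lambda x, y: 0.1 * x * y)
```

The point of the sketch is only the shape of the operation: geometry is created and changed after the vertex stage, without the CPU having to supply the extra triangles.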

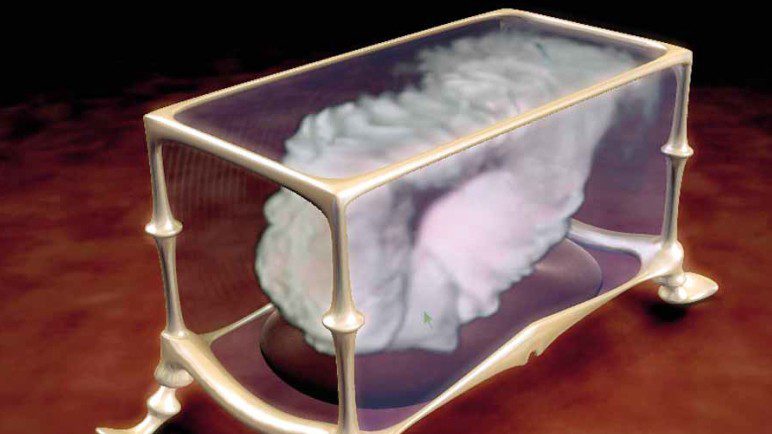

Froggy demonstrates how animations can be calculated directly in the graphics processor using a feature called Stream-Out. By the way: All Geforce 8 demos except Waterworld run on Windows XP using OpenGL.

DirectX 10 also increases the demands on the graphics chip in terms of maximum shader program length and cache sizes. The maximum texture resolution increases from 2048 x 2048 to 8192 x 8192 pixels.
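What the larger texture limit means for memory can be estimated with a short calculation. The sketch below assumes uncompressed textures with 4 bytes per texel (32-bit RGBA) and no mipmaps; both are assumptions for illustration, since games typically use compressed formats.

```python
BYTES_PER_TEXEL = 4  # assumption: uncompressed 32-bit RGBA, no mipmaps


def texture_mib(side: int, bytes_per_texel: int = BYTES_PER_TEXEL) -> float:
    """Memory footprint in MiB of a square texture with the given edge length."""
    return side * side * bytes_per_texel / (1024 * 1024)


old_mib = texture_mib(2048)  # previous maximum resolution
new_mib = texture_mib(8192)  # DirectX 10 maximum resolution
print(old_mib, new_mib, new_mib / old_mib)  # 16.0 256.0 16.0
```

Under these assumptions, a single texture at the new maximum size takes sixteen times as much memory as one at the old maximum.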

An essential, though not mandatory, feature of the new programming interface is the so-called unified shader, which ATI first implemented in the Xenos graphics chip for the Xbox 360. Contrary to many rumors, the Geforce 8 is also based on these unified shaders. Strictly separate arithmetic units for vertex and pixel calculations are a thing of the past.

This significantly increases gaming performance: on a traditional shader architecture, the vertex units sit idle when mostly pixel work is pending, and vice versa. Unified shaders divide the computing work among themselves according to the workload and thus make better use of the available resources.
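The effect can be illustrated with a simplified model. The unit counts and workloads below are hypothetical numbers chosen for the example, not measurements, and the model ignores scheduling overhead and assumes work can be split perfectly.

```python
def frame_time_fixed(vertex_work: float, pixel_work: float,
                     vertex_units: int, pixel_units: int) -> float:
    """Separate units: each type only processes its own work, the slower side decides."""
    return max(vertex_work / vertex_units, pixel_work / pixel_units)


def frame_time_unified(vertex_work: float, pixel_work: float, total_units: int) -> float:
    """Unified units: any unit can take any work, so the load spreads evenly."""
    return (vertex_work + pixel_work) / total_units


def utilization(total_work: float, units: int, frame_time: float) -> float:
    """Share of the available unit time that was actually spent working."""
    return total_work / (units * frame_time)


# Hypothetical chip: 8 vertex + 24 pixel units versus 32 unified units.
pixel_heavy = frame_time_fixed(40, 600, 8, 24)    # 25.0, vertex units mostly idle
vertex_heavy = frame_time_fixed(400, 240, 8, 24)  # 50.0, pixel units mostly idle
unified = frame_time_unified(400, 240, 32)        # 20.0 for the same total work

print(utilization(640, 32, pixel_heavy))   # 0.8
print(utilization(640, 32, vertex_heavy))  # 0.4
print(utilization(640, 32, unified))       # 1.0
```

In this toy model the unified design finishes both workloads in the same time, while the fixed split loses between 20 and 60 percent of its capacity to idle units.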

Geforce 8 details

In the Smoke Sim demo, the Geforce 8 takes care of both the full physics computation and the rendering of the smoke.

The Geforce 8800 GTX and GTS both use the all-new G80 graphics processor, which is made up of 681 million transistors, more than twice as many as the G71 of the Geforce 7900 GTX (278 million). The G80 is the first graphics chip ever to meet the comprehensive requirements of DirectX 10.

128 unified shader processors, of which only 96 are active in the GTS, handle the vertex, geometry and pixel calculations. For optimal utilization, the G80 distributes the image to be computed across the individual arithmetic units, taking the current load of the shaders into account.

Basically, this is no different from a game making use of more than one CPU: the more efficient this technique, called threading, the better the use of resources. By the way, ATI has been doing this since the Radeon X1000 generation.

DirectX 10 Waterworld rendering impresses with rough surfaces and GPU physics calculations.

A special feature of the Geforce 8 is that the shaders and the rest of the chip run at different clock frequencies. While the shaders of the GTX run at 1,350 MHz, the remaining units are clocked at 575 MHz; the corresponding GTS rates are 1,200 and 500 MHz. The Radeon X1950 XTX runs at a uniform 650 MHz.

Compared to the 7900 GTX, Nvidia widened the memory interface, which is important for gaming performance, from 256 to 384 bits and increased the video memory from 512 MB to 768 MB. Since the cheaper GTS carries only ten memory chips instead of twelve, its memory interface is only 320 bits wide and its memory size is 640 MB.
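The GTS figures follow directly from the chip count. The per-chip values in this short sketch are not stated in the test; they are derived from the GTX totals under the assumption that all twelve chips are identical.

```python
GTX_CHIPS = 12
BITS_PER_CHIP = 384 // GTX_CHIPS  # 32 bits of bus width per chip (derived)
MB_PER_CHIP = 768 // GTX_CHIPS    # 64 MB per chip (derived)


def memory_config(chips: int) -> tuple:
    """Return (bus width in bits, memory size in MB) for a given chip count."""
    return chips * BITS_PER_CHIP, chips * MB_PER_CHIP


print(memory_config(12))  # (384, 768) -> Geforce 8800 GTX
print(memory_config(10))  # (320, 640) -> Geforce 8800 GTS
```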

Both Geforce 8 boards have two SLI connectors, one of them for classic SLI. A further Geforce 8 can be connected to the second one as well, but as a dedicated physics card. The dual-link DVI outputs of the Geforce 8 support 30-inch monitors with a resolution of up to 2560 x 1600 pixels, as well as HDCP copy protection for upcoming HD DVD and Blu-ray media.

